Part 1: HPC fundamentals

Overview of HPC at USC

Hello world

For a quick-n-dirty intro to Slurm, we will start with a simple “Hello world” using Slurm + R. For this, we need to go through the following steps:

Copy a Slurm script to HPC,

Log in to HPC, and

Submit the job using sbatch.

Step 1: Copy the Slurm script to HPC

We need to copy the following Slurm script to HPC (00-hello-world.slurm):

#!/bin/sh

#SBATCH --output=00-hello-world.out

module load usc r

Rscript -e "paste('Hello from node', Sys.getenv('SLURMD_NODENAME'))"

This script has four lines:

#!/bin/sh: the shebang, which tells the system which interpreter should run the script.

#SBATCH --output=00-hello-world.out: an option to be passed to sbatch; in this case, the name of the output file to which stdout and stderr will go.

module load usc r: uses Lmod to load the usc (required) and R modules.

Rscript ...: a call to R to evaluate the expression paste(...). This gets the environment variable SLURMD_NODENAME (which sbatch creates) and prints it in a message.
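Outside of a Slurm job, SLURMD_NODENAME is normally unset, so the message would end with an empty node name. The following is a minimal sketch for trying the same idea locally in plain shell rather than R. The fallback label not-inside-slurm is a hypothetical placeholder and is not part of the original script.

```shell
#!/bin/sh
# To the shell, "#SBATCH" lines are ordinary comments; sbatch is what reads them.
#SBATCH --output=00-hello-world.out

# Use the node name when running under Slurm; otherwise fall back to a
# hypothetical placeholder label (an assumption, for local testing only).
node="${SLURMD_NODENAME:-not-inside-slurm}"

# Build and print the greeting.
msg="Hello from node $node"
echo "$msg"
```

Run on a laptop, this prints the placeholder; submitted with sbatch, it should print the name of the compute node instead.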

To copy the file, we will use the secure copy protocol (scp), which allows us to copy data between computers. In this case, we should do something like the following:

scp 00-hello-world.slurm vegayon@hpc-transfer1.usc.edu:/home1/vegayon/

In words: "Using the username vegayon, connect to hpc-transfer1.usc.edu, take the file 00-hello-world.slurm, and copy it to /home1/vegayon/." With the file now available in the cluster, we can submit this job using Slurm.
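To adapt the scp call to your own account, it can help to assemble it from its parts first. The sketch below only prints the command so it can be checked before running it. The username flastname is a hypothetical placeholder, and the destination assumes that home directories follow the /home1/<user>/ pattern seen in the example above.

```shell
#!/bin/sh
# Hypothetical values; replace them with your own username and file.
user="flastname"
host="hpc-transfer1.usc.edu"   # transfer node named in the text
file="00-hello-world.slurm"
dest="/home1/$user/"           # assumption: home directory is /home1/<user>/

# General form: scp <local file> <user>@<host>:<remote path>
cmd="scp $file $user@$host:$dest"

# Print the command for inspection instead of running it.
echo "$cmd"
```

Once the printed command looks right, it can be pasted into the terminal to perform the actual copy.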

Step 2: Logging in to HPC

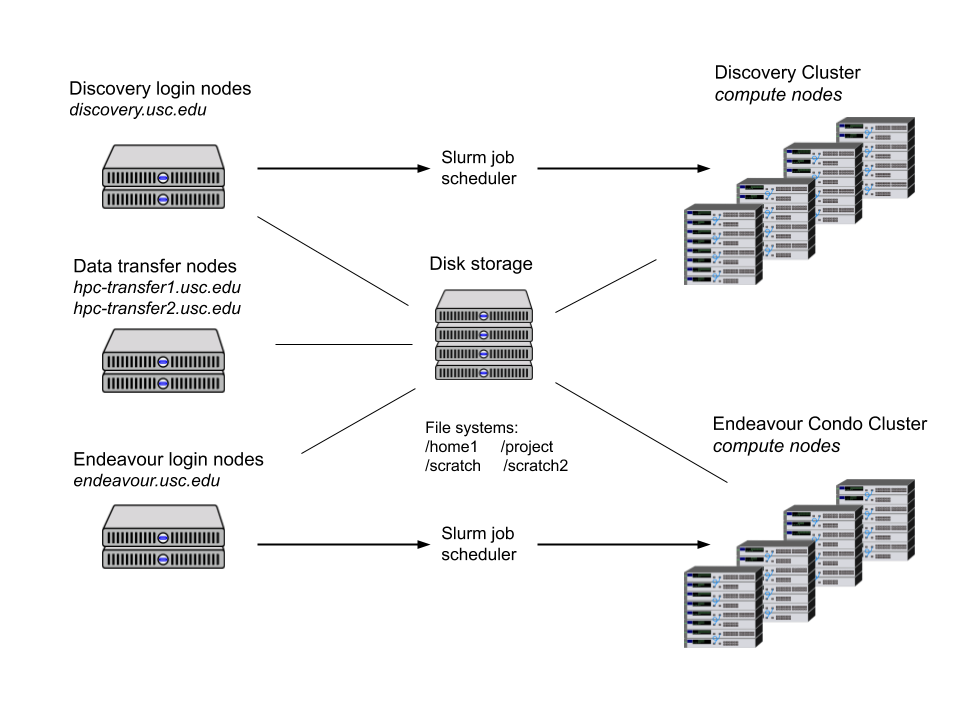

Log in using ssh. Windows users can download the PuTTY client. You have two options: the discovery or the endeavour cluster.

To log in, you will need to use your USC Net ID. If your USC email is flastname@usc.edu, your USC Net ID is flastname. Then:

ssh flastname@discovery.usc.edu

if you want to use the discovery cluster (available to all USC members), or

ssh flastname@endeavour.usc.edu

if you want to use the endeavour cluster (using private condos).
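The rule for the Net ID (the part of the USC email before the @) can be written as a short shell sketch. The email address is the placeholder used in the text, and the variable names are illustrative only. The ssh command is printed rather than run.

```shell
#!/bin/sh
# Placeholder email from the text; replace it with your own.
email="flastname@usc.edu"

# Strip everything from the first "@" onward to get the Net ID.
netid="${email%%@*}"

# Choose "discovery" or "endeavour".
cluster="discovery"

# Assemble the login target and show the command to run.
target="${netid}@${cluster}.usc.edu"
echo "ssh $target"
```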

Step 3: Submitting the job

Overall, there are two ways to use the compute nodes: interactively (salloc) and in batch mode (sbatch). In this case, since we have a Slurm script, we will use the latter.

To submit the job, we can simply type the following:

sbatch 00-hello-world.slurm

And that’s it!
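After submitting, it is often useful to keep the job ID and check the queue. The following is a sketch, assuming it runs on a login node in the directory that holds the script. It uses the --parsable option of sbatch and the -u option of squeue, and the guard lets it run harmlessly on a machine without Slurm.

```shell
#!/bin/sh
script="00-hello-world.slurm"

if command -v sbatch >/dev/null 2>&1 && [ -f "$script" ]; then
    # --parsable makes sbatch print only the job ID
    # (followed by the cluster name, if there is one).
    jobid="$(sbatch --parsable "$script")"
    echo "Submitted job $jobid"

    # List your own pending and running jobs.
    squeue -u "$USER"
    status="submitted"
else
    echo "sbatch or $script not found; run this on a login node, next to the script"
    status="skipped"
fi
```

When the job has finished, the file 00-hello-world.out (named by the --output option in the script) should contain the greeting, and can be viewed with cat 00-hello-world.out.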

For interactive sessions, you can start one using the salloc command. For example, if you wanted to run R with 8 cores, using 16 GB of memory in total, you would need to do the following:

salloc -n1 --cpus-per-task=8 --mem-per-cpu=2G --time=01:00:00

Once your request is submitted, you will get access to a compute node. Within it, you can load the required modules and start R:

module load usc r

R

Interactive sessions are not recommended for long jobs. Instead, use this resource if you need to inspect some large dataset, debug your code, etc.
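The salloc request above asks for memory per CPU, so the total is the number of cores times the per-core amount: 8 x 2G = 16G. A small sketch of that arithmetic, with illustrative variable names, which prints the resulting command rather than running it:

```shell
#!/bin/sh
cpus=8             # cores for the single task
mem_per_cpu_gb=2   # memory per core, in GB

# Total memory is cores times memory per core.
total_mem_gb=$((cpus * mem_per_cpu_gb))
echo "Total memory requested: ${total_mem_gb}G"

# Assemble the matching salloc call (printed, not executed).
cmd="salloc -n1 --cpus-per-task=$cpus --mem-per-cpu=${mem_per_cpu_gb}G --time=01:00:00"
echo "$cmd"
```

Changing cpus or mem_per_cpu_gb shows how the total scales before you commit to a request.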