6 Profiling and benchmarking

6.1 Introduction

This chapter will introduce the the concepts of profiling and benchmarking. These concepts are universal withing programming . This chapter will focus on its practical implementation and usage in the R programming language.

The overall goal of these techniques is to measure the performance of the code you have written. Remember this is a measure of speed, not a measure of correctness.

6.2 Profiling

Profiling is the act of measuring the run-time of each line of code you have run. Knowing where the time is being spend in your code is beneficial as it is a good indication of where you should spend your time optimizing. In general you want to look for small areas that take up most of the time (also called a “bottleneck”) and focus on those before other parts. There is little reason to spend time optimizing a piece of code that only take up 0.1% of the time when you could work at a piece that takes up 70% of the time.

We will use the the profvis package to do profiling. It have a couple of different ways of interacting. In the first one you load the profvis package and then you wrap the code you want to profile in profvis({ and }) as shown below show below.

library(profvis)

profvis({

data <- runif(1e7)

# Three different ways of getting the square root

square_root <- sqrt(data)

square_root <- data ^ (1/2)

square_root <- exp(1) ^ (1/2 * log(data))

})Another way if you are using the Rstudio IDE, comes from the navigation bar where you can access the profiling tool.

Figure 6.1: Profile location in Rstudio IDE navigation bar.

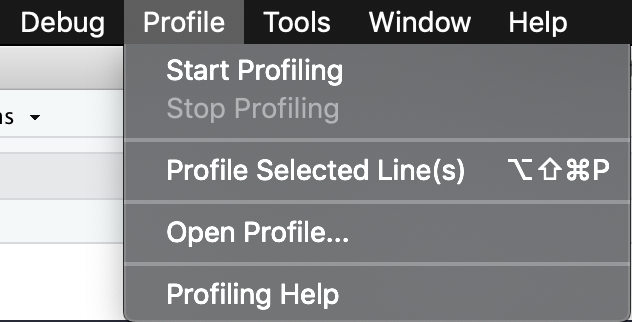

Clicking this tab reveals the following actions:

- “Profile selected line(s)”

- “Start profiling” & “Stop profiling”

Figure 6.2: Profiling actions.

Being able to profile selected lines of code is great if you have a short and compact piece of code that easily can be highlighted and tested.

On the other hand is the ability start and stop the profiling whenever you want a powerful tool. In addition to being able to profile code from different areas, you are also able to stop profiling before the code is done executing, which you aren’t able to in the previous 2 methods. This is useful if you want to profile a snapshot of a long-running simulation as it can have very consistent behavior since it is running the same thing millions of time.

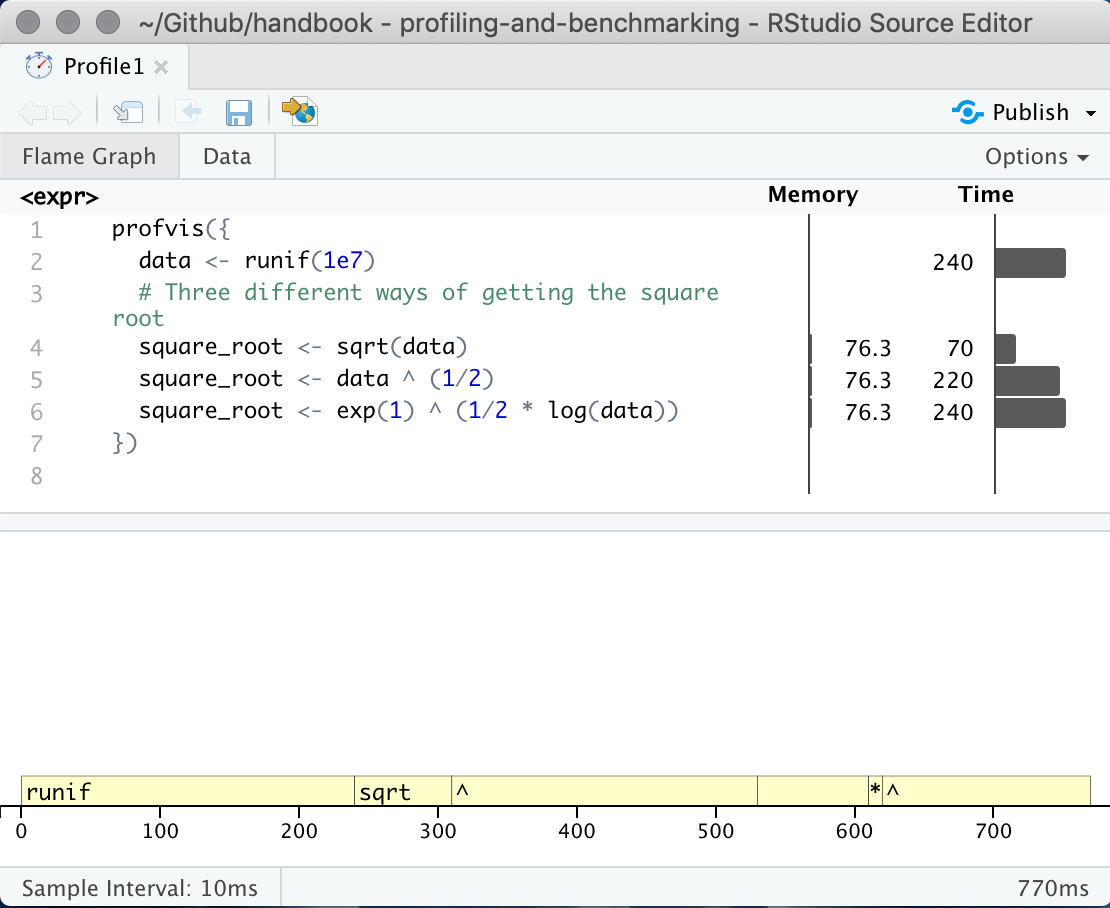

No matter which of the three way you do your profile you will be presented with a page with a frame-graph

Figure 6.3: profvis output showing source on top and flame graph below.

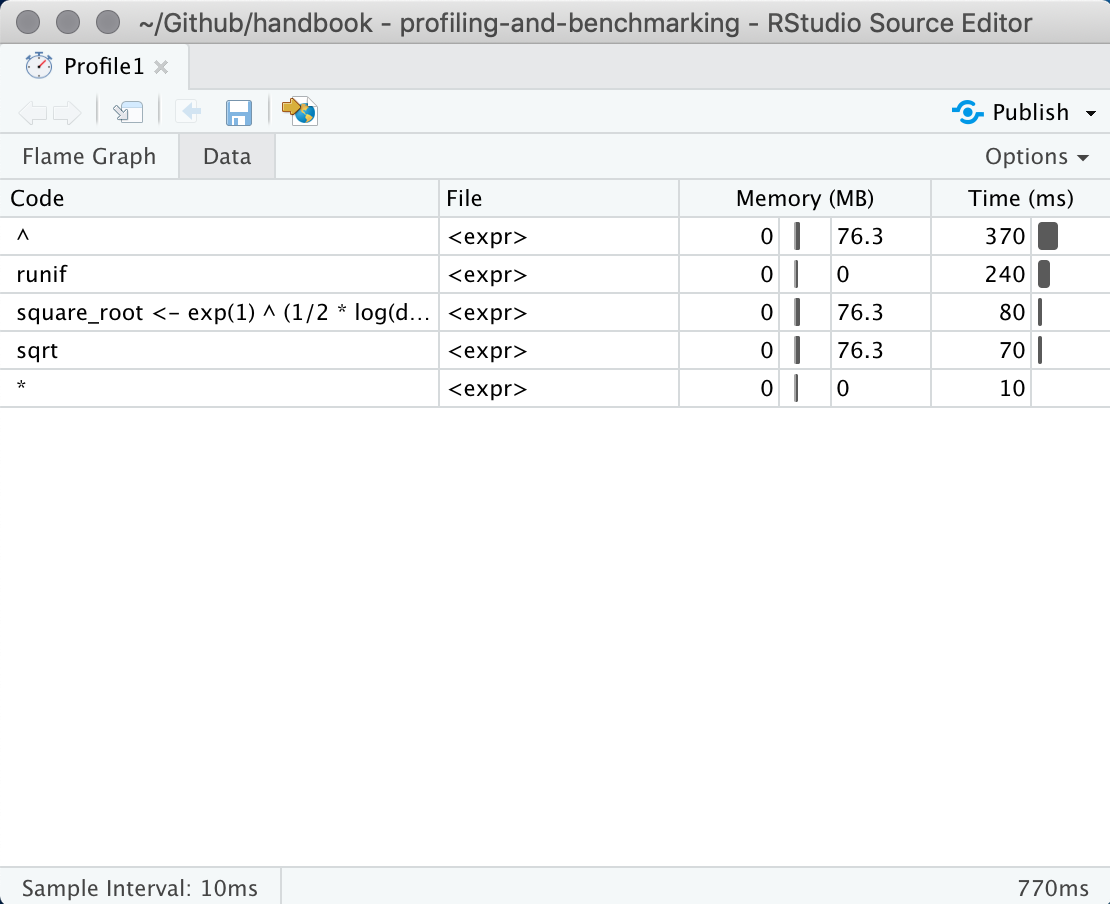

This interactive panel shows how much time and memory is being spend on each line of code. From here you should be able to identify Another useful view can be found by clicking on the “data” tab at the top. This shows how long time is being spend in each expression. We can see in this example that the power operator ^ is taking the majority of the time.

Figure 6.4: profvis data view showcases results by expresion in stead of by line.

6.2.1 Troubleshooting

Sometimes when you are using profvis you will see the error

Error in parse_rprof(prof_output, expr_source) :

No parsing data available. Maybe your function was too fast?This is because your code finished running before profvis was able to detect it. This might feel like good news, but it can make it difficult to profile very fast functions. To profile a fast function you simply let it run a lot of times. This can easily be done by putting it inside a for-loop. You change this

profvis({

data <- c(3, 7, 2)

super_fast_function(data)

})to

profvis({

data <- c(3, 7, 2)

for (i in 1:1000) {

super_fast_function(data)

}

})where you increase the number until it is run enough for the profiler to catch it.

6.3 Benchmarking

Measuring how long something takes is a simple skill that will become invaluable once you start to focus on making your code faster. Simply put, if you can’t measure how fast something is you don’t know if it is going any faster. This section will be broken into 2 sections

- benchmarking slow code and,

- benchmarking fast code.

In this content slow is something that takes seconds, minutes, hours or more. It is a situation where you could use a conventional stopwatch. Fast is anything faster, it is used in the context where you have two pieces of code you think does the same and you want to find out which one is faster.

6.3.1 Slow code

First we need to create a function to benchmark, here we will use this simple recursive formula for the fibonacci sequence. This function doesn’t scale well with n so it will be perfect for these examples.

fibonacci <- function(n) {

if(n == 0) {

return(0)

}

if(n == 1) {

return(1)

}

fibonacci(n - 1) + fibonacci(n - 2)

}Using system.time() is classic way to measure how long something takes, simple wrap the code you want to time between system.time({ and }).

system.time({

fibonacci(32)

})## user system elapsed

## 2.740 0.000 2.744The first two numbers are the are the total user and system CPU times of the current R process and any child processes on which it has waited, and the third entry is the ‘real’ elapsed time since the process was started. An alternative with the same functionality from the bench package is the function system_time().

library(bench)

system_time({

fibonacci(32)

})## process real

## 2.67s 2.67swhere the two values are

- process - The process CPU usage of the expression evaluation.

- real - The wall clock time of the expression evaluation.

Another great tool is the tictoc package. Simply call tic() when to start recording and toc() to end recording.

library(tictoc)

tic()

x <- fibonacci(32)

toc()## 2.808 sec elapsedIn addition does this package extend the timing functionality in such a way that we are able to measure times in nested context. In the following example we are generating some data and fitting a model. Calling tic() another time before the toc() allows us to measure subsections of the whole.

library(tictoc)

tic("Total")

tic("Data Generation")

X <- matrix(rnorm(5000 * 1000), 5000, 1000)

b <- sample(1:1000, 1000)

y <- runif(1) + X %*% b + rnorm(5000)

toc()

tic("Model Fitting")

model <- lm(y ~ X)

toc()

toc()## Data Generation: 0.407 sec elapsed

## Model Fitting: 4.796 sec elapsed

## Total: 5.206 sec elapsedThis can be useful if you want to be able to time the overall script as well as parts of it. Notice how each timing is named.

6.3.2 Fast code - microbenchmarking

Here we will look at the what happens when we want to compare two expressions to see which one is faster. We will use the bench package again. Suppose we would like to determine the fastest way of calculating the variance of a selection of numbers. We use the mark() function from the bench and insert 2 or more expressions we would like to test against each other. These expressions are then run a lot of times and the summary statistics of the times are given as a result.

library(bench)

x <- rnorm(1000)

bench::mark(

var(x),

cov(x, x)

)## # A tibble: 2 x 10

## expression min mean median max `itr/sec` mem_alloc n_gc n_itr

## <chr> <bch:> <bch:> <bch:> <bch:t> <dbl> <bch:byt> <dbl> <int>

## 1 var(x) 9.5µs 11.9µs 10.7µs 103.2µs 83963. 13.7KB 1 9999

## 2 cov(x, x) 19.5µs 22.1µs 21.5µs 77.9µs 45231. 47.6KB 4 9996

## # … with 1 more variable: total_time <bch:tm>mark() also checks that all the expressions return the same output as a sanity check. Notice the units

- 1 ms, then one thousand calls takes a second.

- 1 µs, then one million calls takes a second.

- 1 ns, then one billion calls takes a second.

6.4 Additional resources

https://adv-r.hadley.nz/perf-measure.html

Chapter on “Measuring performance” from Advanced R by Hadley Wickham. Covers more or less the same topics as this chapter but with more examples and greater details, great next step for reading.