A flexible implementation of the Metropolis-Hastings MCMC algorithm, users can utilize arbitrary transition kernels as well as set-up an automatic stop criterion using a convergence check test.

MCMC(

initial,

fun,

nsteps,

...,

seed = NULL,

nchains = 1L,

burnin = 0L,

thin = 1L,

kernel = kernel_normal(),

multicore = FALSE,

conv_checker = NULL,

cl = NULL,

progress = interactive() && !multicore,

chain_id = 1L

)

MCMC_without_conv_checker(

initial,

fun,

nsteps,

...,

nchains = 1L,

burnin = 0L,

thin = 1L,

kernel = kernel_normal(),

multicore = FALSE,

conv_checker = NULL,

cl = NULL,

progress = interactive() && !multicore,

chain_id = 1L

)

MCMC_OUTPUTArguments

- initial

Either a numeric matrix or vector, or an object of class coda::mcmc or coda::mcmc.list (see details). initial values of the parameters for each chain (See details).

- fun

A function. Returns the log-likelihood.

- nsteps

Integer scalar. Length of each chain.

- ...

Further arguments passed to

fun.- seed

If not null, passed to set.seed.

- nchains

Integer scalar. Number of chains to run (in parallel).

- burnin

Integer scalar. Length of burn-in. Passed to coda::mcmc as

start.- thin

Integer scalar. Passed to coda::mcmc.

- kernel

An object of class fmcmc_kernel.

- multicore

Logical. If

FALSEthen chains will be executed in serial.- conv_checker

A function that receives an object of class coda::mcmc.list, and returns a logical value with

TRUEindicating convergence. See the "Automatic stop" section and the convergence-checker manual.- cl

A

clusterobject passed to parallel::clusterApply.- progress

Logical scalar. When set to

TRUEshows a progress bar. A new bar will be show every time that the convergence checker is called.- chain_id

Integer scalar (internal use only). This is an argument passed to the kernel function and it allows it identify in which of the chains the process is taking place. This could be relevant for some kernels (see

kernel_new()).

Value

MCMC returns an object of class coda::mcmc from the coda

package. The mcmc object is a matrix with one column per parameter,

and nsteps rows. If nchains > 1, then it returns a coda::mcmc.list.

While the main output of MCMC is the mcmc(.list) object, other information

and intermediate outputs of the process are stored in MCMC_OUTPUT. For information

about how to retrieve/set data, see mcmc-output.

Details

This function implements Markov Chain Monte Carlo (MCMC) using the Metropolis-Hastings ratio with flexible transition kernels. Users can specify either one of the available transition kernels or define one of their own (see kernels). Furthermore, it allows easy parallel implementation running multiple chains in parallel. The function also allows using convergence diagnostics tests to set-up a criterion for automatically stopping the algorithm (see convergence-checker).

The canonical form of the Metropolis Hastings algorithm consists on accepting a move from state \(x\) to state \(y\) based on the Hastings ratio \(r(x,y)\):

$$% r(x,y) = \frac{h(y)q(y,x)}{h(x)q(x,y)},% $$

where \(h\) is the unnormalized density of the specified distribution ( the posterior probability), and \(q\) has the conditional probability of moving from state \(x\) to \(y\) (the proposal density). The move \(x \to y\) is then accepted with probability

$$% \alpha(x,y) = \min\left(1, r(x,y)\right)% $$

Observe that, in the case that \(q()\) is symmetric, meaning \(q(x, y) = q(y, x)\),

the Hastings ration reduces to \(h(y)/h(x)\). Starting version 0.5-0, the value

of the log unnormalized density and the proposed states y can be accessed using

the functions get_logpost() and get_draws().

We now give details of the various options included in the function.

The function MCMC_without_conv_checker is for internal use

only.

Starting point

By default, if initial is of class mcmc, MCMC will take the last nchains

points from the chain as starting point for the new sequence. If initial is

of class mcmc.list, the number of chains in initial must match the nchains

parameter.

If initial is a vector, then it must be of length equal to the number of

parameters used in the model. When using multiple chains, if initial is not

an object of class mcmc or mcmc.list, then it must be a numeric matrix

with as many rows as chains, and as many columns as parameters in the model.

Multiple chains

When nchains > 1, the function will run multiple chains. Furthermore,

if cl is not passed, MCMC will create a PSOCK cluster

using makePSOCKcluster with

parallel::detectCores

clusters and attempt to execute using multiple cores. Internally, the function does

the following:

# Creating the cluster

ncores <- parallel::detectCores()

ncores <- ifelse(nchains < ncores, nchains, ncores)

cl <- parallel::makePSOCKcluster(ncores)

# Loading the package and setting the seed using clusterRNGStream

invisible(parallel::clusterEvalQ(cl, library(fmcmc)))

parallel::clusterSetRNGStream(cl, .Random.seed)When running in parallel, objects that are

used within fun must be passed through ..., otherwise the cluster

will return with an error.

The user controls the initial value of the parameters of the MCMC algorithm

using the argument initial. When using multiple chains, i.e., nchains > 1,

the user can specify multiple starting points, which is recommended. In such a

case, each row of initial is use as a starting point for each of the

chains. If initial is a vector and nchains > 1, the value is recycled, so

all chains start from the same point (not recommended, the function throws a

warning message).

Automatic stop

By default, no automatic stop is implemented. If one of the functions in

convergence-checker is used, then the MCMC is done by bulks as specified

by the convergence checker function, and thus the algorithm will stop if,

the conv_checker returns TRUE. For more information see convergence-checker.

References

Brooks, S., Gelman, A., Jones, G. L., & Meng, X. L. (2011). Handbook of Markov Chain Monte Carlo. Handbook of Markov Chain Monte Carlo.

Vega Yon, G., & Marjoram, P. (2019). fmcmc: A friendly MCMC framework. Journal of Open Source Software, 4(39), 1427. doi:10.21105/joss.01427

See also

get_logpost(), get_logpost() (mcmc-output) for post execution of MCMC, and

ith_step() for accessing objects within an MCMC call.

Examples

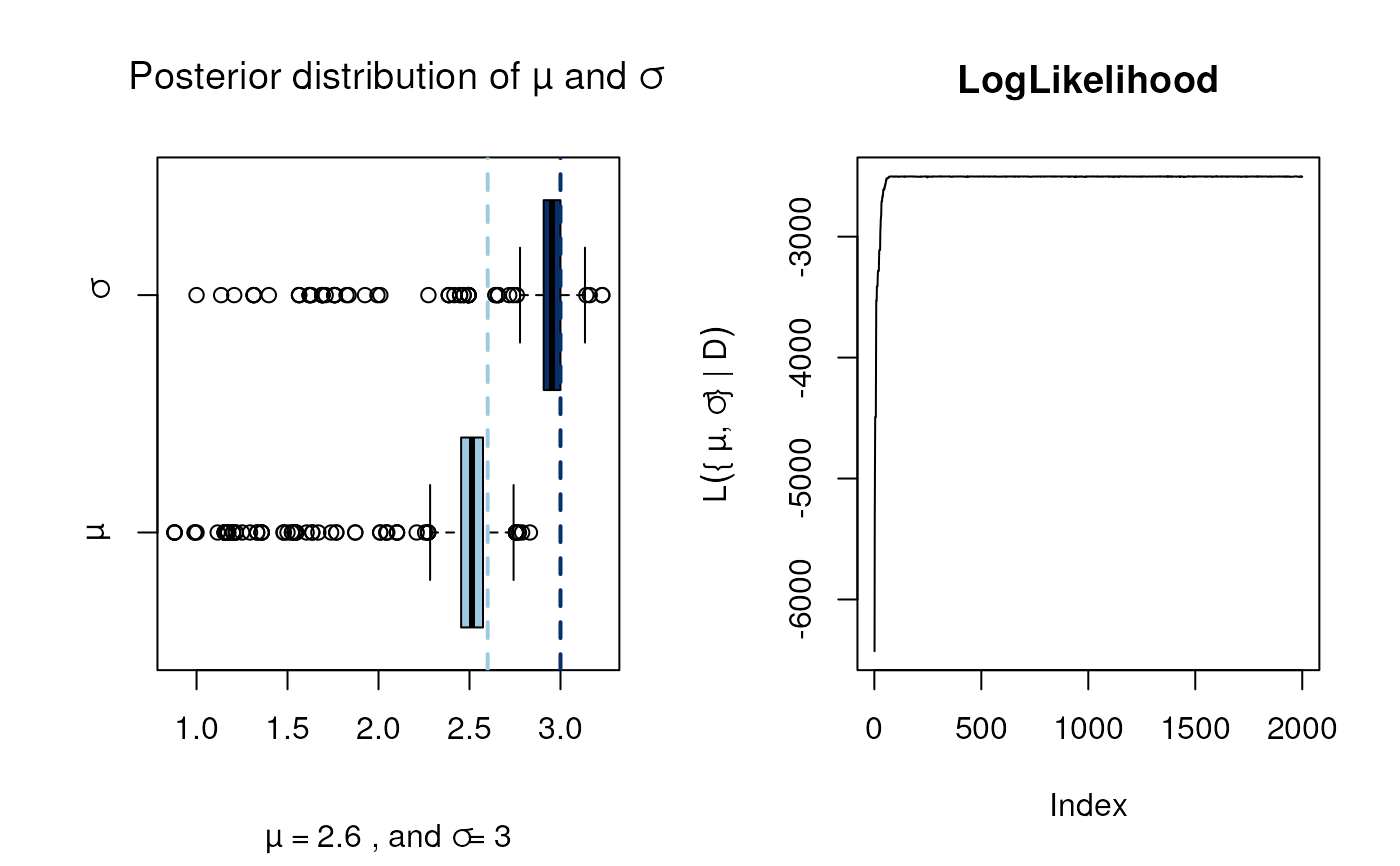

# Univariate distributed data with multiple parameters ----------------------

# Parameters

set.seed(1231)

n <- 1e3

pars <- c(mean = 2.6, sd = 3)

# Generating data and writing the log likelihood function

D <- rnorm(n, pars[1], pars[2])

fun <- function(x) {

x <- log(dnorm(D, x[1], x[2]))

sum(x)

}

# Calling MCMC, but first, loading the coda R package for

# diagnostics

library(coda)

ans <- MCMC(

fun, initial = c(mu=1, sigma=1), nsteps = 2e3,

kernel = kernel_normal_reflective(scale = .1, ub = 10, lb = 0)

)

# Ploting the output

oldpar <- par(no.readonly = TRUE)

par(mfrow = c(1,2))

boxplot(as.matrix(ans),

main = expression("Posterior distribution of"~mu~and~sigma),

names = expression(mu, sigma), horizontal = TRUE,

col = blues9[c(4,9)],

sub = bquote(mu == .(pars[1])~", and"~sigma == .(pars[2]))

)

abline(v = pars, col = blues9[c(4,9)], lwd = 2, lty = 2)

plot(apply(as.matrix(ans), 1, fun), type = "l",

main = "LogLikelihood",

ylab = expression(L("{"~mu,sigma~"}"~"|"~D))

)

par(oldpar)

# In this example we estimate the parameter for a dataset with ----------------

# With 5,000 draws from a MVN() with parameters M and S.

# \donttest{

# Loading the required packages

library(mvtnorm)

#> Error in library(mvtnorm): there is no package called ‘mvtnorm’

library(coda)

# Parameters and data simulation

S <- cbind(c(.8, .2), c(.2, 1))

M <- c(0, 1)

set.seed(123)

D <- rmvnorm(5e3, mean = M, sigma = S)

#> Error in rmvnorm(5000, mean = M, sigma = S): could not find function "rmvnorm"

# Function to pass to MCMC

fun <- function(pars) {

# Putting the parameters in a sensible way

m <- pars[1:2]

s <- cbind( c(pars[3], pars[4]), c(pars[4], pars[5]) )

# Computing the unnormalized log likelihood

sum(log(dmvnorm(D, m, s)))

}

# Calling MCMC

ans <- MCMC(

initial = c(mu0=5, mu1=5, s0=5, s01=0, s2=5),

fun,

kernel = kernel_normal_reflective(

lb = c(-10, -10, .01, -5, .01),

ub = 5,

scale = 0.01

),

nsteps = 1e3,

thin = 10,

burnin = 5e2

)

#> Error in dmvnorm(D, m, s): could not find function "dmvnorm"

# Checking out the outcomes

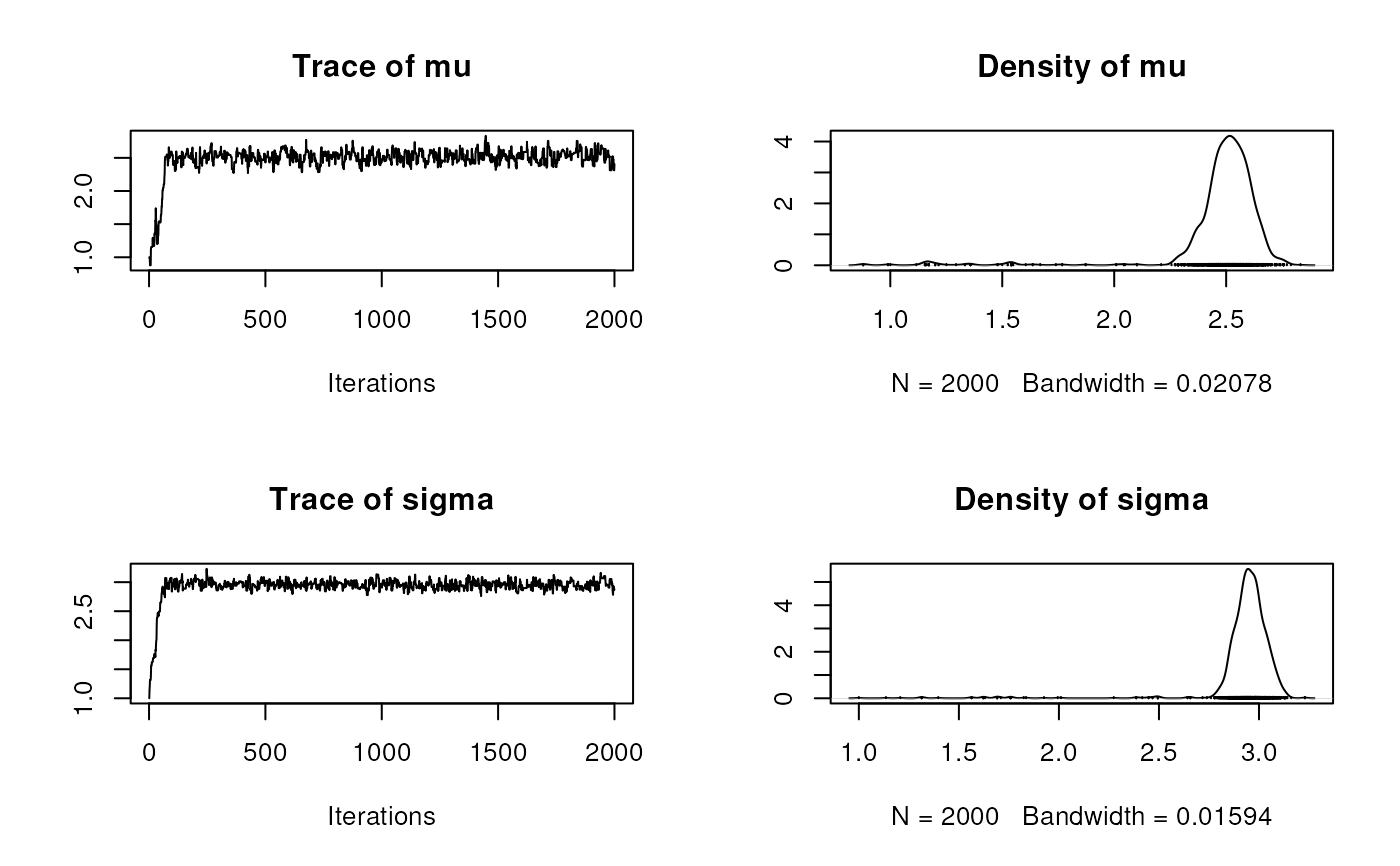

plot(ans)

par(oldpar)

# In this example we estimate the parameter for a dataset with ----------------

# With 5,000 draws from a MVN() with parameters M and S.

# \donttest{

# Loading the required packages

library(mvtnorm)

#> Error in library(mvtnorm): there is no package called ‘mvtnorm’

library(coda)

# Parameters and data simulation

S <- cbind(c(.8, .2), c(.2, 1))

M <- c(0, 1)

set.seed(123)

D <- rmvnorm(5e3, mean = M, sigma = S)

#> Error in rmvnorm(5000, mean = M, sigma = S): could not find function "rmvnorm"

# Function to pass to MCMC

fun <- function(pars) {

# Putting the parameters in a sensible way

m <- pars[1:2]

s <- cbind( c(pars[3], pars[4]), c(pars[4], pars[5]) )

# Computing the unnormalized log likelihood

sum(log(dmvnorm(D, m, s)))

}

# Calling MCMC

ans <- MCMC(

initial = c(mu0=5, mu1=5, s0=5, s01=0, s2=5),

fun,

kernel = kernel_normal_reflective(

lb = c(-10, -10, .01, -5, .01),

ub = 5,

scale = 0.01

),

nsteps = 1e3,

thin = 10,

burnin = 5e2

)

#> Error in dmvnorm(D, m, s): could not find function "dmvnorm"

# Checking out the outcomes

plot(ans)

summary(ans)

#>

#> Iterations = 1:2000

#> Thinning interval = 1

#> Number of chains = 1

#> Sample size per chain = 2000

#>

#> 1. Empirical mean and standard deviation for each variable,

#> plus standard error of the mean:

#>

#> Mean SD Naive SE Time-series SE

#> mu 2.481 0.2206 0.004934 0.04068

#> sigma 2.931 0.1908 0.004267 0.03238

#>

#> 2. Quantiles for each variable:

#>

#> 2.5% 25% 50% 75% 97.5%

#> mu 1.636 2.454 2.514 2.574 2.676

#> sigma 2.657 2.908 2.953 3.000 3.095

#>

# Multiple chains -----------------------------------------------------------

# As we want to run -fun- in multiple cores, we have to

# pass -D- explicitly (unless using Fork Clusters)

# just like specifying that we are calling a function from the

# -mvtnorm- package.

fun <- function(pars, D) {

# Putting the parameters in a sensible way

m <- pars[1:2]

s <- cbind( c(pars[3], pars[4]), c(pars[4], pars[5]) )

# Computing the unnormalized log likelihood

sum(log(mvtnorm::dmvnorm(D, m, s)))

}

# Two chains

ans <- MCMC(

initial = c(mu0=5, mu1=5, s0=5, s01=0, s2=5),

fun,

nchains = 2,

kernel = kernel_normal_reflective(

lb = c(-10, -10, .01, -5, .01),

ub = 5,

scale = 0.01

),

nsteps = 1e3,

thin = 10,

burnin = 5e2,

D = D

)

#> Warning: While using multiple chains, a single initial point has been passed via `initial`: c(5, 5, 5, 0, 5). The values will be recycled. Ideally you would want to start each chain from different locations.

#> Error in loadNamespace(x): there is no package called ‘mvtnorm’

summary(ans)

#>

#> Iterations = 1:2000

#> Thinning interval = 1

#> Number of chains = 1

#> Sample size per chain = 2000

#>

#> 1. Empirical mean and standard deviation for each variable,

#> plus standard error of the mean:

#>

#> Mean SD Naive SE Time-series SE

#> mu 2.481 0.2206 0.004934 0.04068

#> sigma 2.931 0.1908 0.004267 0.03238

#>

#> 2. Quantiles for each variable:

#>

#> 2.5% 25% 50% 75% 97.5%

#> mu 1.636 2.454 2.514 2.574 2.676

#> sigma 2.657 2.908 2.953 3.000 3.095

#>

# }

# Example using a user-defined cl object -------------------------------------

if (FALSE) {

# A silly function that gets the first two parameters of the

# vector. Using the default multicore example will cause and error

get_m <- function(pars) {

pars[1:2]

}

fun <- function(pars, D) {

# Putting the parameters in a sensible way

m <- get_m(pars)

s <- cbind( c(pars[3], pars[4]), c(pars[4], pars[5]) )

# Computing the unnormalized log likelihood

sum(log(mvtnorm::dmvnorm(D, m, s)))

}

# This will fail with the error

# Error in checkForRemoteErrors(val) :

# 4 nodes produced errors; first error: could not find function "get_m"

ans <- MCMC(

initial = c(mu0=5, mu1=5, s0=5, s01=0, s2=5),

fun,

kernel = kernel_normal_reflective(

lb = c(-10, -10, .01, -5, .01),

ub = 5,

scale = 0.01

),

nsteps = 1e3,

thin = 10,

burnin = 5e2,

D = D,

# Multiple chains using parallel computing

multicore = TRUE,

nchains = 4

)

summary(ans)

# To solve the error, we need to build the cluster object

library(parallel)

cl <- makePSOCKcluster(4)

# Export the function `get_m` to the cluster. The function `fun` and data `D`

# are automatically exported by the `MCMC` function.

clusterExport(cl, "get_m")

# Run the MCMC

ans <- MCMC(

initial = c(mu0=5, mu1=5, s0=5, s01=0, s2=5),

fun,

kernel = kernel_normal_reflective(

lb = c(-10, -10, .01, -5, .01),

ub = 5,

scale = 0.01

),

nsteps = 1e3,

thin = 10,

burnin = 5e2,

D = D,

# Multiple chains using parallel computing

multicore = TRUE,

nchains = 4,

# Use the cluster object

cl = cl

)

summary(ans)

}

summary(ans)

#>

#> Iterations = 1:2000

#> Thinning interval = 1

#> Number of chains = 1

#> Sample size per chain = 2000

#>

#> 1. Empirical mean and standard deviation for each variable,

#> plus standard error of the mean:

#>

#> Mean SD Naive SE Time-series SE

#> mu 2.481 0.2206 0.004934 0.04068

#> sigma 2.931 0.1908 0.004267 0.03238

#>

#> 2. Quantiles for each variable:

#>

#> 2.5% 25% 50% 75% 97.5%

#> mu 1.636 2.454 2.514 2.574 2.676

#> sigma 2.657 2.908 2.953 3.000 3.095

#>

# Multiple chains -----------------------------------------------------------

# As we want to run -fun- in multiple cores, we have to

# pass -D- explicitly (unless using Fork Clusters)

# just like specifying that we are calling a function from the

# -mvtnorm- package.

fun <- function(pars, D) {

# Putting the parameters in a sensible way

m <- pars[1:2]

s <- cbind( c(pars[3], pars[4]), c(pars[4], pars[5]) )

# Computing the unnormalized log likelihood

sum(log(mvtnorm::dmvnorm(D, m, s)))

}

# Two chains

ans <- MCMC(

initial = c(mu0=5, mu1=5, s0=5, s01=0, s2=5),

fun,

nchains = 2,

kernel = kernel_normal_reflective(

lb = c(-10, -10, .01, -5, .01),

ub = 5,

scale = 0.01

),

nsteps = 1e3,

thin = 10,

burnin = 5e2,

D = D

)

#> Warning: While using multiple chains, a single initial point has been passed via `initial`: c(5, 5, 5, 0, 5). The values will be recycled. Ideally you would want to start each chain from different locations.

#> Error in loadNamespace(x): there is no package called ‘mvtnorm’

summary(ans)

#>

#> Iterations = 1:2000

#> Thinning interval = 1

#> Number of chains = 1

#> Sample size per chain = 2000

#>

#> 1. Empirical mean and standard deviation for each variable,

#> plus standard error of the mean:

#>

#> Mean SD Naive SE Time-series SE

#> mu 2.481 0.2206 0.004934 0.04068

#> sigma 2.931 0.1908 0.004267 0.03238

#>

#> 2. Quantiles for each variable:

#>

#> 2.5% 25% 50% 75% 97.5%

#> mu 1.636 2.454 2.514 2.574 2.676

#> sigma 2.657 2.908 2.953 3.000 3.095

#>

# }

# Example using a user-defined cl object -------------------------------------

if (FALSE) {

# A silly function that gets the first two parameters of the

# vector. Using the default multicore example will cause and error

get_m <- function(pars) {

pars[1:2]

}

fun <- function(pars, D) {

# Putting the parameters in a sensible way

m <- get_m(pars)

s <- cbind( c(pars[3], pars[4]), c(pars[4], pars[5]) )

# Computing the unnormalized log likelihood

sum(log(mvtnorm::dmvnorm(D, m, s)))

}

# This will fail with the error

# Error in checkForRemoteErrors(val) :

# 4 nodes produced errors; first error: could not find function "get_m"

ans <- MCMC(

initial = c(mu0=5, mu1=5, s0=5, s01=0, s2=5),

fun,

kernel = kernel_normal_reflective(

lb = c(-10, -10, .01, -5, .01),

ub = 5,

scale = 0.01

),

nsteps = 1e3,

thin = 10,

burnin = 5e2,

D = D,

# Multiple chains using parallel computing

multicore = TRUE,

nchains = 4

)

summary(ans)

# To solve the error, we need to build the cluster object

library(parallel)

cl <- makePSOCKcluster(4)

# Export the function `get_m` to the cluster. The function `fun` and data `D`

# are automatically exported by the `MCMC` function.

clusterExport(cl, "get_m")

# Run the MCMC

ans <- MCMC(

initial = c(mu0=5, mu1=5, s0=5, s01=0, s2=5),

fun,

kernel = kernel_normal_reflective(

lb = c(-10, -10, .01, -5, .01),

ub = 5,

scale = 0.01

),

nsteps = 1e3,

thin = 10,

burnin = 5e2,

D = D,

# Multiple chains using parallel computing

multicore = TRUE,

nchains = 4,

# Use the cluster object

cl = cl

)

summary(ans)

}