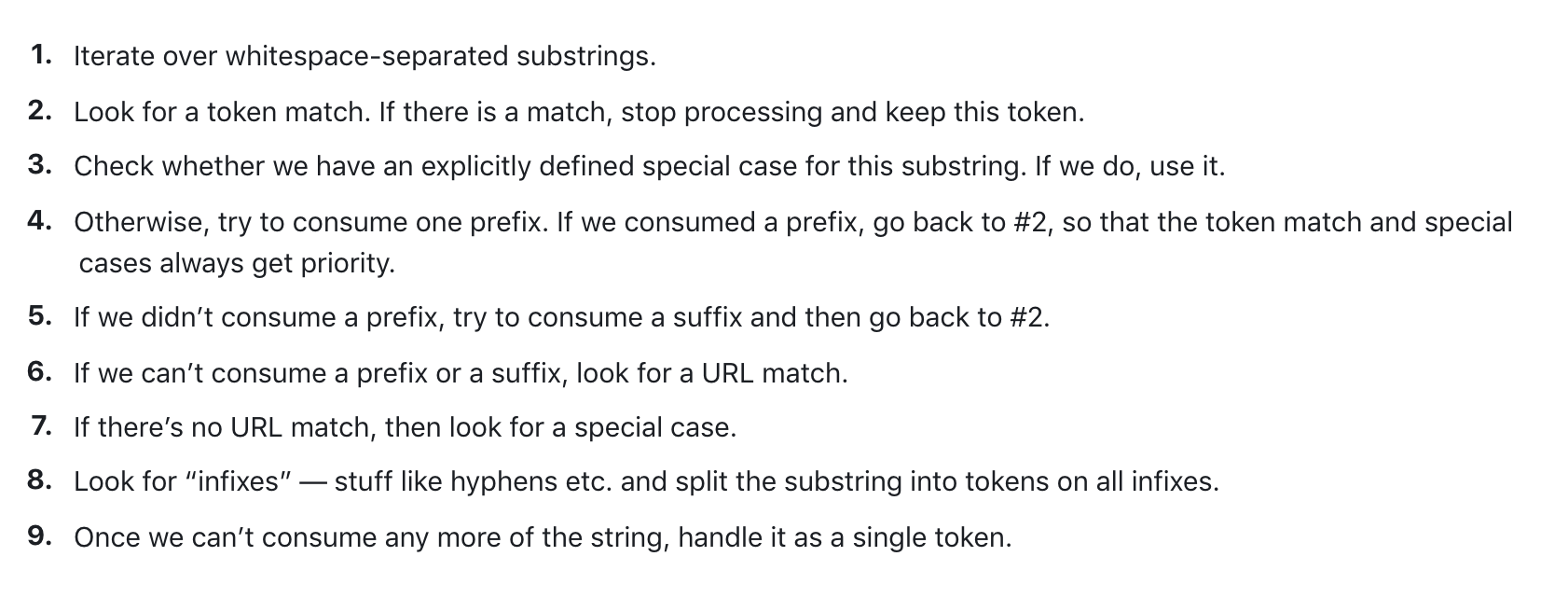

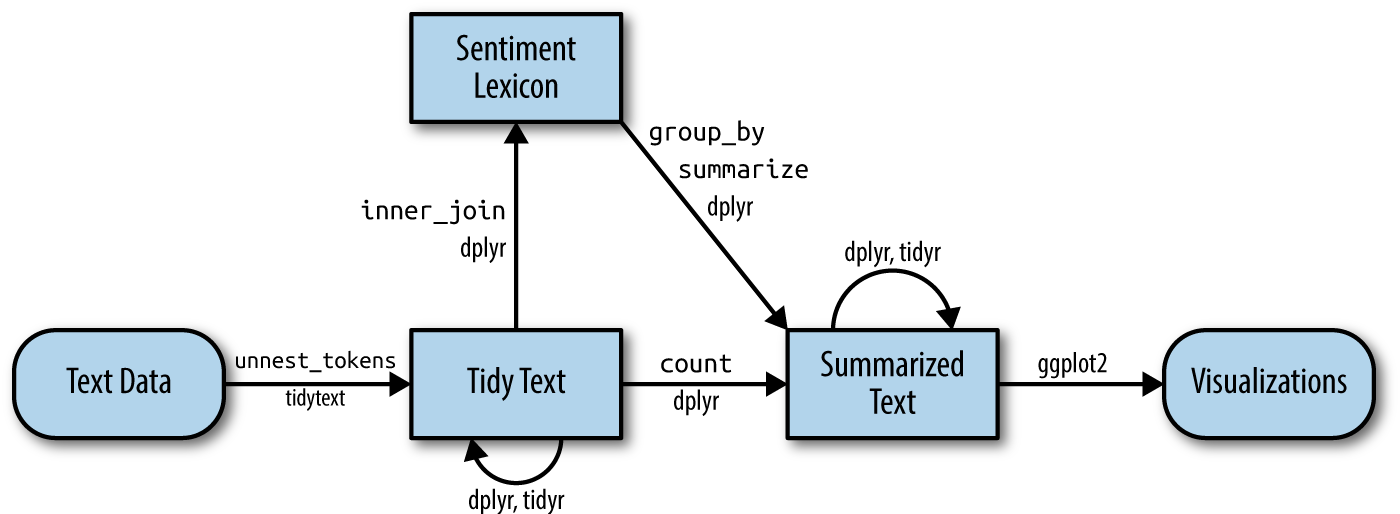

class: center, middle, title-slide .title[ # Text Mining ] .subtitle[ ## PM566 - Week 6 ] .author[ ### Emil Hvitfeldt and Kelly Street ] --- <style type="text/css"> .orange {color: #EF8633} </style> ## Acknowledgment These slides were originally developed by Emil Hvitfeldt and modified by George G. Vega Yon. --- # Plan for the week - We will turn text into numbers - Then we will use tidy principles to explore those numbers ---  --- # Why tidytext? Works seamlessly with ggplot2, dplyr and tidyr. **Alternatives:** **R**: quanteda, tm, koRpus **Python**: NLTK, spaCy, gensim --- # Alice's Adventures in Wonderland Download the alice dataset from https://USCBiostats.github.io/PM566/slides/06-textmining/alice.rds (or from [here](alice.rds)) ``` r library(tidyverse) alice <- readRDS("alice.rds") alice ``` ``` ## # A tibble: 3,351 × 3 ## text chapter chapter_name ## <chr> <int> <chr> ## 1 "CHAPTER I." 1 CHAPTER I. ## 2 "Down the Rabbit-Hole" 1 CHAPTER I. ## 3 "" 1 CHAPTER I. ## 4 "" 1 CHAPTER I. ## 5 "Alice was beginning to get very tired of sitting by he… 1 CHAPTER I. ## 6 "bank, and of having nothing to do: once or twice she h… 1 CHAPTER I. ## 7 "the book her sister was reading, but it had no picture… 1 CHAPTER I. ## 8 "conversations in it, “and what is the use of a book,” … 1 CHAPTER I. ## 9 "“without pictures or conversations?”" 1 CHAPTER I. ## 10 "" 1 CHAPTER I. ## # ℹ 3,341 more rows ``` --- # Tokenizing Turning text into smaller units -- In English: - split by spaces - more advanced algorithms --- # spaCy tokenizer  --- # Turning the data into a tidy format ``` r library(tidytext) alice |> unnest_tokens(token, text) ``` ``` ## # A tibble: 26,687 × 3 ## chapter chapter_name token ## <int> <chr> <chr> ## 1 1 CHAPTER I. chapter ## 2 1 CHAPTER I. i ## 3 1 CHAPTER I. down ## 4 1 CHAPTER I. the ## 5 1 CHAPTER I. rabbit ## 6 1 CHAPTER I. hole ## 7 1 CHAPTER I. alice ## 8 1 CHAPTER I. was ## 9 1 CHAPTER I. beginning ## 10 1 CHAPTER I. 
to ## # ℹ 26,677 more rows ``` --- # Words as a unit Now that we have words as the observation unit we can use the **dplyr** toolbox. --- # Using dplyr verbs .pull-left[ ``` r library(dplyr) alice |> unnest_tokens(token, text) ``` ] .pull-right[ ``` ## # A tibble: 26,687 × 3 ## chapter chapter_name token ## <int> <chr> <chr> ## 1 1 CHAPTER I. chapter ## 2 1 CHAPTER I. i ## 3 1 CHAPTER I. down ## 4 1 CHAPTER I. the ## 5 1 CHAPTER I. rabbit ## 6 1 CHAPTER I. hole ## 7 1 CHAPTER I. alice ## 8 1 CHAPTER I. was ## 9 1 CHAPTER I. beginning ## 10 1 CHAPTER I. to ## # ℹ 26,677 more rows ``` ] --- # Using dplyr verbs .pull-left[ ``` r library(dplyr) alice |> unnest_tokens(token, text) |> count(token) ``` ] .pull-right[ ``` ## # A tibble: 2,740 × 2 ## token n ## <chr> <int> ## 1 _alice’s 1 ## 2 _all 1 ## 3 _all_ 1 ## 4 _and 1 ## 5 _are_ 4 ## 6 _at 1 ## 7 _before 1 ## 8 _beg_ 1 ## 9 _began_ 1 ## 10 _best_ 2 ## # ℹ 2,730 more rows ``` ] --- # Using dplyr verbs .pull-left[ ``` r library(dplyr) alice |> unnest_tokens(token, text) |> count(token, sort = TRUE) ``` ] .pull-right[ ``` ## # A tibble: 2,740 × 2 ## token n ## <chr> <int> ## 1 the 1643 ## 2 and 871 ## 3 to 729 ## 4 a 632 ## 5 she 538 ## 6 it 527 ## 7 of 514 ## 8 said 460 ## 9 i 393 ## 10 alice 386 ## # ℹ 2,730 more rows ``` ] --- # Using dplyr verbs .pull-left[ ``` r library(dplyr) alice |> unnest_tokens(token, text) |> count(chapter, token) ``` ] .pull-right[ ``` ## # A tibble: 7,549 × 3 ## chapter token n ## <int> <chr> <int> ## 1 1 _curtseying_ 1 ## 2 1 _never_ 1 ## 3 1 _not_ 1 ## 4 1 _one_ 1 ## 5 1 _poison_ 1 ## 6 1 _that_ 1 ## 7 1 _through_ 1 ## 8 1 _took 1 ## 9 1 _very_ 4 ## 10 1 _was_ 1 ## # ℹ 7,539 more rows ``` ] --- # Using dplyr verbs .pull-left[ ``` r library(dplyr) alice |> unnest_tokens(token, text) |> group_by(chapter) |> count(token) |> top_n(10, n) ``` ] .pull-right[ ``` ## # A tibble: 122 × 3 ## # Groups: chapter [12] ## chapter token n ## <int> <chr> <int> ## 1 1 a 52 ## 2 1 alice 27 ## 3 1 and 65 ## 
4 1 i 30 ## 5 1 it 62 ## 6 1 of 43 ## 7 1 she 79 ## 8 1 the 92 ## 9 1 to 75 ## 10 1 was 52 ## # ℹ 112 more rows ``` ] --- # Using dplyr verbs and ggplot2 .pull-left[ ``` r library(dplyr) library(ggplot2) alice |> unnest_tokens(token, text) |> count(token) |> top_n(10, n) |> ggplot(aes(n, token)) + geom_col() ``` ] .pull-right[ <img src="slides_files/figure-html/unnamed-chunk-11-1.png" width="700px" style="display: block; margin: auto;" /> ] --- # Using dplyr verbs and ggplot2 .pull-left[ ``` r library(dplyr) library(ggplot2) library(forcats) alice |> unnest_tokens(token, text) |> count(token) |> top_n(10, n) |> ggplot(aes(n, fct_reorder(token, n))) + geom_col() ``` ] .pull-right[ <img src="slides_files/figure-html/unnamed-chunk-12-1.png" width="700px" style="display: block; margin: auto;" /> ] --- # Stop words A lot of the words don't tell us very much. Words such as "the", "and", "at" and "for" appear a lot in English text but don't add much meaning. Words such as these are called **stop words**. For more information about how stop word lists differ and when to remove stop words, read this chapter: https://smltar.com/stopwords --- ## Stop words in tidytext tidytext comes with a data.frame of stop words ``` r stop_words ``` ``` ## # A tibble: 1,149 × 2 ## word lexicon ## <chr> <chr> ## 1 a SMART ## 2 a's SMART ## 3 able SMART ## 4 about SMART ## 5 above SMART ## 6 according SMART ## 7 accordingly SMART ## 8 across SMART ## 9 actually SMART ## 10 after SMART ## # ℹ 1,139 more rows ``` --- # snowball stopwords ``` ## [1] "i" "me" "my" "myself" "we" ## [6] "our" "ours" "ourselves" "you" "your" ## [11] "yours" "yourself" "yourselves" "he" "him" ## [16] "his" "himself" "she" "her" "hers" ## [21] "herself" "it" "its" "itself" "they" ## [26] "them" "their" "theirs" "themselves" "what" ## [31] "which" "who" "whom" "this" "that" ## [36] "these" "those" "am" "is" "are" ## [41] "was" "were" "be" "been" "being" ## [46] "have" "has" "had" "having" "do" ## [51] "does" "did" 
"doing" "would" "should" ## [56] "could" "ought" "i'm" "you're" "he's" ## [61] "she's" "it's" "we're" "they're" "i've" ## [66] "you've" "we've" "they've" "i'd" "you'd" ## [71] "he'd" "she'd" "we'd" "they'd" "i'll" ## [76] "you'll" "he'll" "she'll" "we'll" "they'll" ## [81] "isn't" "aren't" "wasn't" "weren't" "hasn't" ## [86] "haven't" "hadn't" "doesn't" "don't" "didn't" ## [91] "won't" "wouldn't" "shan't" "shouldn't" "can't" ## [96] "cannot" "couldn't" "mustn't" "let's" "that's" ## [101] "who's" "what's" "here's" "there's" "when's" ## [106] "where's" "why's" "how's" "a" "an" ## [111] "the" "and" "but" "if" "or" ## [116] "because" "as" "until" "while" "of" ## [121] "at" "by" "for" "with" "about" ## [126] "against" "between" "into" "through" "during" ## [131] "before" "after" "above" "below" "to" ## [136] "from" "up" "down" "in" "out" ## [141] "on" "off" "over" "under" "again" ## [146] "further" "then" "once" "here" "there" ## [151] "when" "where" "why" "how" "all" ## [156] "any" "both" "each" "few" "more" ## [161] "most" "other" "some" "such" "no" ## [166] "nor" "not" "only" "own" "same" ## [171] "so" "than" "too" "very" ``` --- # funky stop words quiz #1 .pull-left[ - he's - she's - himself - herself ] --- # funky stop words quiz #1 .pull-left[ - he's - .orange[she's] - himself - herself ] .pull-right[ .orange[she's] doesn't appear in the SMART list ] --- # funky stop words quiz #2 .pull-left[ - owl - bee - fify - system1 ] --- # funky stop words quiz #2 .pull-left[ - owl - bee - .orange[fify] - system1 ] .pull-right[ .orange[fify] was left undetected for 3 years (2012 to 2015) in scikit-learn ] --- # funky stop words quiz #3 .pull-left[ - substantially - successfully - sufficiently - statistically ] --- # funky stop words quiz #3 .pull-left[ - substantially - successfully - sufficiently - .orange[statistically] ] .pull-right[ .orange[statistically] doesn't appear in the Stopwords ISO list ] --- ## Removing stopwords We can use an `anti_join()` to remove the tokens 
that also appear in the `stop_words` data.frame. Contractions such as "don’t" and "it’s" survive because the book uses curly apostrophes (’) while `stop_words` uses straight ones ('). .pull-left[ ``` r alice |> unnest_tokens(token, text) |> anti_join(stop_words, by = c("token" = "word")) |> count(token, sort = TRUE) ``` ] .pull-right[ ``` ## # A tibble: 2,314 × 2 ## token n ## <chr> <int> ## 1 alice 386 ## 2 time 71 ## 3 queen 68 ## 4 king 61 ## 5 don’t 60 ## 6 it’s 57 ## 7 i’m 56 ## 8 mock 56 ## 9 turtle 56 ## 10 gryphon 55 ## # ℹ 2,304 more rows ``` ] --- ## Anti-join with same variable name .pull-left[ ``` r alice |> unnest_tokens(word, text) |> anti_join(stop_words, by = c("word")) |> count(word, sort = TRUE) ``` ] .pull-right[ ``` ## # A tibble: 2,314 × 2 ## word n ## <chr> <int> ## 1 alice 386 ## 2 time 71 ## 3 queen 68 ## 4 king 61 ## 5 don’t 60 ## 6 it’s 57 ## 7 i’m 56 ## 8 mock 56 ## 9 turtle 56 ## 10 gryphon 55 ## # ℹ 2,304 more rows ``` ] --- # Stop words removed .pull-left[ ``` r alice |> unnest_tokens(word, text) |> anti_join(stop_words, by = c("word")) |> count(word, sort = TRUE) |> top_n(10, n) |> ggplot(aes(n, fct_reorder(word, n))) + geom_col() ``` ] .pull-right[ <img src="slides_files/figure-html/unnamed-chunk-16-1.png" width="700px" style="display: block; margin: auto;" /> ] --- ## Which words appear together? **ngrams** are sequences of n consecutive words; we can count them to see which words appear together. -- - ngrams with n = 1 are called unigrams: "which", "words", "appear", "together" - ngrams with n = 2 are called bigrams: "which words", "words appear", "appear together" - ngrams with n = 3 are called trigrams: "which words appear", "words appear together" --- ## Which words appear together? We can extract bigrams using `unnest_ngrams()` with `n = 2`: ``` r alice |> unnest_ngrams(ngram, text, n = 2) ``` ``` ## # A tibble: 25,170 × 3 ## chapter chapter_name ngram ## <int> <chr> <chr> ## 1 1 CHAPTER I. chapter i ## 2 1 CHAPTER I. down the ## 3 1 CHAPTER I. the rabbit ## 4 1 CHAPTER I. rabbit hole ## 5 1 CHAPTER I. <NA> ## 6 1 CHAPTER I. <NA> ## 7 1 CHAPTER I. 
alice was ## 8 1 CHAPTER I. was beginning ## 9 1 CHAPTER I. beginning to ## 10 1 CHAPTER I. to get ## # ℹ 25,160 more rows ``` --- # Which words appear together? Tallying up the bigrams still shows a lot of stop words, but it is able to pick up relationships between words, such as "said alice" and "the queen". The `<NA>` rows come from lines with fewer than two words, such as blank lines. ``` r alice |> unnest_ngrams(ngram, text, n = 2) |> count(ngram, sort = TRUE) ``` ``` ## # A tibble: 13,424 × 2 ## ngram n ## <chr> <int> ## 1 <NA> 951 ## 2 said the 206 ## 3 of the 130 ## 4 said alice 112 ## 5 in a 96 ## 6 and the 75 ## 7 in the 75 ## 8 it was 72 ## 9 to the 68 ## 10 the queen 60 ## # ℹ 13,414 more rows ``` --- # Which words appear together? ``` r alice |> unnest_ngrams(ngram, text, n = 2) |> separate(ngram, into = c("word1", "word2"), sep = " ") |> select(word1, word2) ``` ``` ## # A tibble: 25,170 × 2 ## word1 word2 ## <chr> <chr> ## 1 chapter i ## 2 down the ## 3 the rabbit ## 4 rabbit hole ## 5 <NA> <NA> ## 6 <NA> <NA> ## 7 alice was ## 8 was beginning ## 9 beginning to ## 10 to get ## # ℹ 25,160 more rows ``` --- ``` r alice |> unnest_ngrams(ngram, text, n = 2) |> separate(ngram, into = c("word1", "word2"), sep = " ") |> select(word1, word2) |> filter(word1 == "alice") ``` ``` ## # A tibble: 336 × 2 ## word1 word2 ## <chr> <chr> ## 1 alice was ## 2 alice think ## 3 alice started ## 4 alice after ## 5 alice had ## 6 alice to ## 7 alice had ## 8 alice had ## 9 alice soon ## 10 alice began ## # ℹ 326 more rows ``` --- ``` r alice |> unnest_ngrams(ngram, text, n = 2) |> separate(ngram, into = c("word1", "word2"), sep = " ") |> select(word1, word2) |> filter(word1 == "alice") |> count(word2, sort = TRUE) ``` ``` ## # A tibble: 133 × 2 ## word2 n ## <chr> <int> ## 1 and 18 ## 2 was 17 ## 3 thought 12 ## 4 as 11 ## 5 said 11 ## 6 could 10 ## 7 had 10 ## 8 did 9 ## 9 in 9 ## 10 to 9 ## # ℹ 123 more rows ``` --- ``` r alice |> unnest_ngrams(ngram, text, n = 2) |> separate(ngram, into = c("word1", "word2"), sep = " ") |> select(word1, word2) |> filter(word2 == "alice") |> count(word1, 
sort = TRUE) ``` ``` ## # A tibble: 106 × 2 ## word1 n ## <chr> <int> ## 1 said 112 ## 2 thought 25 ## 3 to 22 ## 4 and 15 ## 5 poor 11 ## 6 cried 7 ## 7 at 6 ## 8 so 6 ## 9 that 5 ## 10 exclaimed 3 ## # ℹ 96 more rows ``` --- # TF-IDF TF: Term frequency IDF: Inverse document frequency TF-IDF: product of TF and IDF TF gives more weight to terms that appear often within a document; IDF gives more weight to terms that appear in only a few documents. In `bind_tf_idf()`, TF is a term's count divided by the total number of terms in its document, and IDF is the natural log of the number of documents divided by the number of documents containing the term. In the following slides each chapter is a document, so a word found in all 12 chapters gets an IDF of log(12/12) = 0. --- # TF-IDF with tidytext .pull-left[ ``` r alice |> unnest_tokens(text, text) ``` ] .pull-right[ ``` ## # A tibble: 26,687 × 3 ## text chapter chapter_name ## <chr> <int> <chr> ## 1 chapter 1 CHAPTER I. ## 2 i 1 CHAPTER I. ## 3 down 1 CHAPTER I. ## 4 the 1 CHAPTER I. ## 5 rabbit 1 CHAPTER I. ## 6 hole 1 CHAPTER I. ## 7 alice 1 CHAPTER I. ## 8 was 1 CHAPTER I. ## 9 beginning 1 CHAPTER I. ## 10 to 1 CHAPTER I. ## # ℹ 26,677 more rows ``` ] --- # TF-IDF with tidytext .pull-left[ ``` r alice |> unnest_tokens(text, text) |> count(text, chapter) ``` ] .pull-right[ ``` ## # A tibble: 7,549 × 3 ## text chapter n ## <chr> <int> <int> ## 1 _alice’s 2 1 ## 2 _all 12 1 ## 3 _all_ 12 1 ## 4 _and 9 1 ## 5 _are_ 4 1 ## 6 _are_ 6 1 ## 7 _are_ 8 1 ## 8 _are_ 9 1 ## 9 _at 9 1 ## 10 _before 12 1 ## # ℹ 7,539 more rows ``` ] --- # TF-IDF with tidytext .pull-left[ ``` r alice |> unnest_tokens(text, text) |> count(text, chapter) |> bind_tf_idf(text, chapter, n) ``` ] .pull-right[ ``` ## # A tibble: 7,549 × 6 ## text chapter n tf idf tf_idf ## <chr> <int> <int> <dbl> <dbl> <dbl> ## 1 _alice’s 2 1 0.000471 2.48 0.00117 ## 2 _all 12 1 0.000468 2.48 0.00116 ## 3 _all_ 12 1 0.000468 2.48 0.00116 ## 4 _and 9 1 0.000435 2.48 0.00108 ## 5 _are_ 4 1 0.000375 1.10 0.000411 ## 6 _are_ 6 1 0.000382 1.10 0.000420 ## 7 _are_ 8 1 0.000400 1.10 0.000439 ## 8 _are_ 9 1 0.000435 1.10 0.000478 ## 9 _at 9 1 0.000435 2.48 0.00108 ## 10 _before 12 1 0.000468 2.48 0.00116 ## # ℹ 7,539 more rows ``` ] --- # TF-IDF with tidytext .pull-left[ ``` r alice |> unnest_tokens(text, text) |> count(text, 
chapter) |> bind_tf_idf(text, chapter, n) |> arrange(desc(tf_idf)) ``` ] .pull-right[ ``` ## # A tibble: 7,549 × 6 ## text chapter n tf idf tf_idf ## <chr> <int> <int> <dbl> <dbl> <dbl> ## 1 dormouse 7 26 0.0112 1.79 0.0201 ## 2 hatter 7 32 0.0138 1.39 0.0191 ## 3 mock 10 28 0.0136 1.39 0.0189 ## 4 turtle 10 28 0.0136 1.39 0.0189 ## 5 gryphon 10 31 0.0151 1.10 0.0166 ## 6 turtle 9 27 0.0117 1.39 0.0163 ## 7 caterpillar 5 25 0.0115 1.39 0.0159 ## 8 dance 10 13 0.00632 2.48 0.0157 ## 9 mock 9 26 0.0113 1.39 0.0157 ## 10 hatter 11 21 0.0110 1.39 0.0153 ## # ℹ 7,539 more rows ``` ] --- # Sentiment Analysis Also known as "opinion mining," sentiment analysis is a way in which we can use computers to attempt to understand the feelings conveyed by a piece of text. This generally relies on a large, human-compiled database of words with known associations such as "positive" and "negative" or specific feelings like "joy", "surprise", "disgust", etc. --- # Sentiment Analysis  --- # Sentiment Lexicons The `tidytext` and `textdata` packages provide access to three different databases of words and their associated sentiments (known as "sentiment lexicons"). Obviously, none of these can be perfect, as there is no "correct" way to quantify feelings, but they all attempt to capture different elements of how a text makes you feel. 
The readily available lexicons are: - `afinn` from [Finn Årup Nielsen](http://www2.imm.dtu.dk/pubdb/views/publication_details.php?id=6010) - `bing` from [Bing Liu and collaborators](https://www.cs.uic.edu/~liub/FBS/sentiment-analysis.html) - `nrc` from [Saif Mohammad and Peter Turney](https://saifmohammad.com/WebPages/NRC-Emotion-Lexicon.htm) --- # Sentiment Lexicons - `bing` The `bing` lexicon contains a large list of words and a binary association, either "positive" or "negative": ``` r get_sentiments('bing') ``` ``` ## # A tibble: 6,786 × 2 ## word sentiment ## <chr> <chr> ## 1 2-faces negative ## 2 abnormal negative ## 3 abolish negative ## 4 abominable negative ## 5 abominably negative ## 6 abominate negative ## 7 abomination negative ## 8 abort negative ## 9 aborted negative ## 10 aborts negative ## # ℹ 6,776 more rows ``` --- # Sentiment Lexicons - `afinn` The `afinn` lexicon goes slightly further, assigning words a value between -5 and 5 that represents their positivity or negativity. ``` r get_sentiments('afinn') ``` ``` ## # A tibble: 2,477 × 2 ## word value ## <chr> <dbl> ## 1 abandon -2 ## 2 abandoned -2 ## 3 abandons -2 ## 4 abducted -2 ## 5 abduction -2 ## 6 abductions -2 ## 7 abhor -3 ## 8 abhorred -3 ## 9 abhorrent -3 ## 10 abhors -3 ## # ℹ 2,467 more rows ``` --- # Sentiment Lexicons - `nrc` The `nrc` lexicon takes a different approach and assigns each word an associated sentiment. 
Some words appear more than once because they have multiple associations: ``` r get_sentiments('nrc') ``` ``` ## # A tibble: 13,872 × 2 ## word sentiment ## <chr> <chr> ## 1 abacus trust ## 2 abandon fear ## 3 abandon negative ## 4 abandon sadness ## 5 abandoned anger ## 6 abandoned fear ## 7 abandoned negative ## 8 abandoned sadness ## 9 abandonment anger ## 10 abandonment fear ## # ℹ 13,862 more rows ``` --- # Sentiment Analysis We can use one of these databases to analyze _Alice's Adventures in Wonderland_ by breaking the text down into words and joining the result with a lexicon. Let's use `bing` to assign "positive" and "negative" labels to as many words as possible in the book; because this is an `inner_join()`, words that do not appear in `bing` are dropped. (Note that this time the column created by `unnest_tokens()` is called `word`, to match the column name in `bing`.) ``` r alice |> unnest_tokens(word, text) |> inner_join(get_sentiments("bing")) ``` ``` ## Joining with `by = join_by(word)` ``` ``` ## # A tibble: 1,409 × 4 ## chapter chapter_name word sentiment ## <int> <chr> <chr> <chr> ## 1 1 CHAPTER I. tired negative ## 2 1 CHAPTER I. well positive ## 3 1 CHAPTER I. hot positive ## 4 1 CHAPTER I. stupid negative ## 5 1 CHAPTER I. pleasure positive ## 6 1 CHAPTER I. worth positive ## 7 1 CHAPTER I. trouble negative ## 8 1 CHAPTER I. remarkable positive ## 9 1 CHAPTER I. burning negative ## 10 1 CHAPTER I. fortunately positive ## # ℹ 1,399 more rows ``` --- # Sentiment Analysis We can now group and summarize this new dataset the same as any other. For example, let's look at the sentiment by chapter. 
We'll do this by counting the number of "positive" words and subtracting the number of "negative" words: ``` r diff_by_chap <- alice |> unnest_tokens(word, text) |> inner_join(get_sentiments("bing")) |> group_by(chapter) |> summarise(sentiment = sum(sentiment == "positive") - sum(sentiment == "negative")) ``` ``` ## Joining with `by = join_by(word)` ``` --- # Sentiment Analysis ``` r barplot(diff_by_chap$sentiment, names.arg = diff_by_chap$chapter) ``` <img src="slides_files/figure-html/unnamed-chunk-32-1.png" width="700px" style="display: block; margin: auto;" /> --- # Sentiment Analysis Alternatively, we could use the `afinn` lexicon and quantify the "sentiment" of each chapter by the average of all words with numeric associations: ``` r avg_by_chap <- alice |> unnest_tokens(word, text) |> inner_join(get_sentiments("afinn")) |> group_by(chapter) |> summarise(sentiment = mean(value)) ``` ``` ## Joining with `by = join_by(word)` ``` --- # Sentiment Analysis ``` r barplot(avg_by_chap$sentiment, names.arg = avg_by_chap$chapter) ``` <img src="slides_files/figure-html/unnamed-chunk-34-1.png" width="700px" style="display: block; margin: auto;" /> --- # Sentiment Analysis Similarly, we can find the most frequent sentiment association in the `nrc` lexicon for each chapter. 
Unfortunately, for all chapters, the most frequent sentiment association ends up being the rather bland "positive" or "negative": ``` r alice |> unnest_tokens(word, text) |> inner_join(get_sentiments("nrc")) |> group_by(chapter) |> summarise(sentiment = names(which.max(table(sentiment)))) ``` ``` ## Joining with `by = join_by(word)` ``` ``` ## # A tibble: 12 × 2 ## chapter sentiment ## <int> <chr> ## 1 1 positive ## 2 2 positive ## 3 3 positive ## 4 4 positive ## 5 5 positive ## 6 6 negative ## 7 7 positive ## 8 8 positive ## 9 9 positive ## 10 10 positive ## 11 11 positive ## 12 12 positive ``` --- # Sentiment Analysis We'll try to spice things up by removing "positive" and "negative" from the `nrc` lexicon: ``` r nrc_fun <- get_sentiments("nrc") nrc_fun <- nrc_fun[!nrc_fun$sentiment %in% c("positive","negative"), ] ``` --- # Sentiment Analysis Now we see a lot of "anticipation": ``` r alice |> unnest_tokens(word, text) |> inner_join(nrc_fun) |> group_by(chapter) |> summarise(sentiment = names(which.max(table(sentiment)))) ``` ``` ## Joining with `by = join_by(word)` ``` ``` ## # A tibble: 12 × 2 ## chapter sentiment ## <int> <chr> ## 1 1 anticipation ## 2 2 anticipation ## 3 3 sadness ## 4 4 anticipation ## 5 5 trust ## 6 6 anticipation ## 7 7 anticipation ## 8 8 anticipation ## 9 9 trust ## 10 10 joy ## 11 11 anticipation ## 12 12 trust ```
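---
# Sentiment Analysis

Keeping only the most frequent emotion hides how close the other emotions are. It can also hide ties: `which.max()` returns the first maximum, and `table()` orders categories alphabetically, so a tie goes to the alphabetically earliest emotion. A minimal base-R sketch of an alternative is to compute the share of each emotion within each chapter. The `matches` data frame is hypothetical and only stands in for the result of `inner_join(nrc_fun)`; with the real data you would use the `chapter` and `sentiment` columns of that join.

``` r
# Hypothetical stand-in for the joined data: one row per matched
# (chapter, emotion) pair, values invented for illustration
matches <- data.frame(
  chapter   = c(1, 1, 1, 1, 2, 2, 2, 2),
  sentiment = c("anticipation", "anticipation", "joy", "trust",
                "joy", "trust", "trust", "joy")
)

# Contingency table: chapters as rows, emotions as columns
counts <- table(matches$chapter, matches$sentiment)

# Share of each emotion within its chapter (each row sums to 1)
shares <- prop.table(counts, margin = 1)
round(shares, 2)

# The single-label summary used above: chapter 2 is a joy/trust tie,
# and which.max() keeps the first (alphabetically earlier) one, "joy"
top <- tapply(matches$sentiment, matches$chapter,
              function(s) names(which.max(table(s))))
top
```

With real data, `barplot(t(shares))` would draw one stacked bar per chapter showing its full emotion profile.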